The European police agency, Europol, has requested unfiltered access to data that would be harvested under a controversial EU proposal to scan online content for child sexual abuse images and for the AI technology behind it to be applied to other crimes too, according to minutes of a high-level meeting in mid-2022.

The meeting, involving Europol Executive Director Catherine de Bolle and the European Commission’s Director-General for Migration and Home Affairs, Monique Pariat, took place in July last year, weeks after the Commission unveiled a proposed regulation that would require digital chat providers to scan client content for child sexual abuse material, or CSAM.

The regulation, put forward by European Commissioner for Home Affairs Ylva Johansson, would also create a new EU agency – the EU centre to prevent and counter child sexual abuse. It has stirred heated debate, with critics warning it risks opening the door to mass surveillance of EU citizens.

In the meeting, the minutes of which were obtained under a Freedom of Information request, Europol requested unlimited access to the data produced from the detection and scanning of communications, and that no boundaries be set on how this data is used.

“All data is useful and should be passed on to law enforcement, there should be no filtering by the [EU] Centre because even an innocent image might contain information that could at some point be useful to law enforcement,” the minutes state. The name of the speaker is redacted, but it is clear from the exchange that it is a Europol official.

The Centre would play a key role in helping member states and companies implement the legislation; it would also vet and approve scanning technologies, as well as receive and filter suspicious reports before passing them to Europol and national authorities.

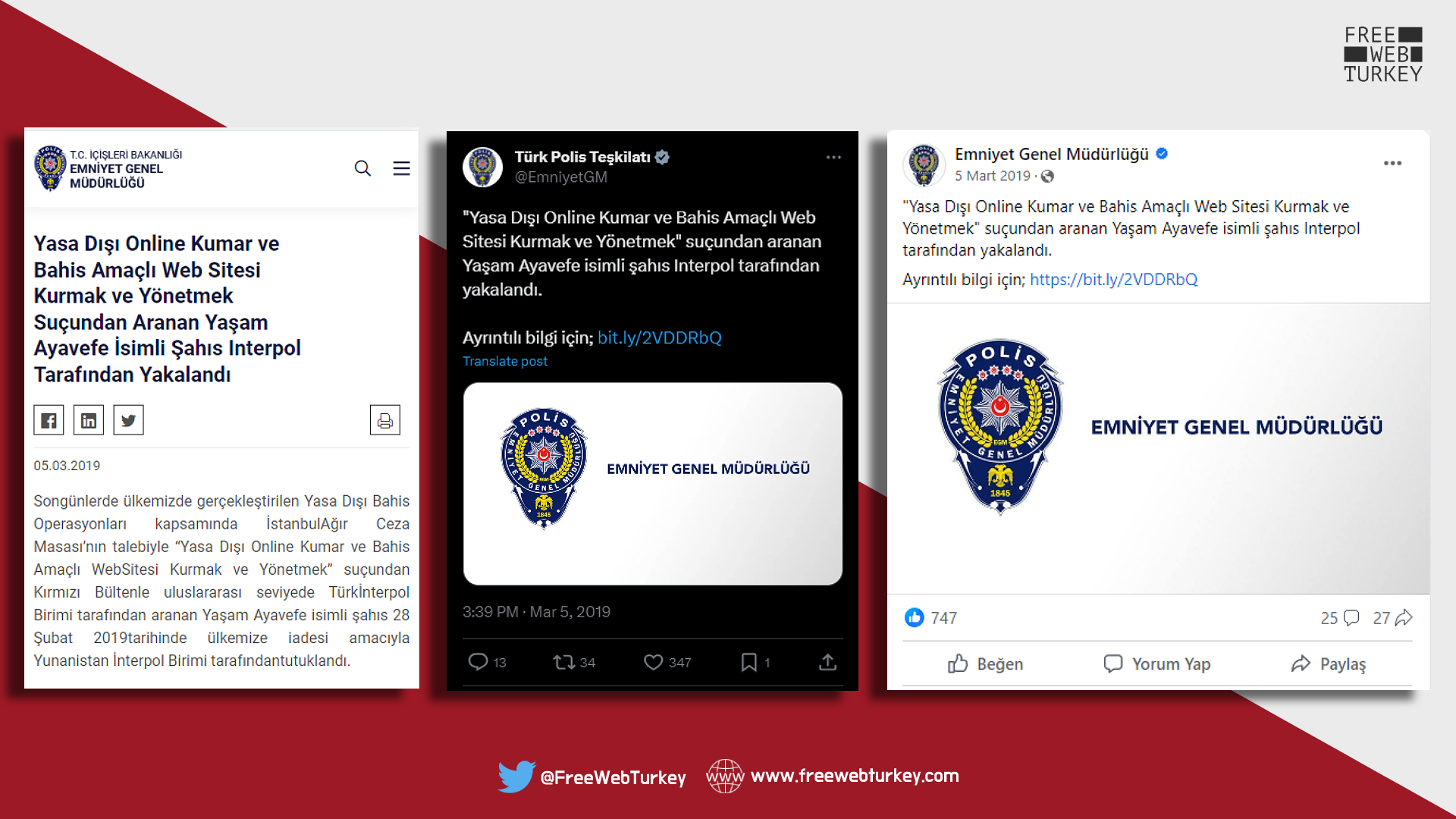

Minutes of the Europol-Commission meeting, obtained by BIRN.

In the same meeting, Europol proposed that detection be expanded to other crime areas beyond CSAM, and suggested including them in the proposed regulation. It also requested the inclusion of other elements that would ensure another EU law in the making, the Artificial Intelligence Act, would not limit the “use of AI tools for investigations”.

The Europol input is apparent in Johansson’s proposal. According to the Commission text, all reports from the EU Centre that are not “manifestly unfounded” will have to be sent simultaneously to Europol and to national law enforcement agencies. Europol will also have access to the Centre’s databases.

Several data protection experts who examined the minutes said Europol had effectively asked for no limits or boundaries in accessing the data, including flawed data such as false positives, or in how it could be used in training algorithms.

Niovi Vavoula, a data protection expert at Queen Mary University of London, said a reference in the document to the need for quality data “points to the direction that Europol will use the data to train algorithms, which according to the recent Europol reform is permitted”.

Europol’s in-house research and development centre, the Innovation Hub, has already started working towards an AI-powered tool to classify child sexual abuse images and videos.

According to an internal Europol document, the agency’s own Fundamental Rights Officer raised concerns in June 2023 about possible “fundamental rights issues” stemming from “biased results, false positives or false negatives”, but gave the project the green light anyway.

In response, Europol declined to comment on internal meetings, but said: “It is imperative to highlight our organisation’s mission and key role to combat the heinous crime of child sexual abuse in the EU. Regarding the future EU Centre on child sexual abuse, Europol was rightfully consulted on the interaction between the future EU Centre’s remit and Europol. Our position as the European Agency for Law Enforcement Cooperation is that we must receive relevant information to protect the EU and its citizens from serious and organised crime, including child sexual abuse.”

Illustrative photo by Alexas_Fotos Pixabay

Staff links

On September 25, BIRN, in cooperation with other European outlets, reported on the complex network of AI and advocacy groups that has helped drum up support for Johansson’s proposal, often in close coordination with the Commission. There are links to Europol too.

According to information available online, Cathal Delaney, a former Europol official who led the agency’s Child Sexual Abuse team at its Cybercrime Centre, and who worked on a CSAM AI pilot project, has begun working for the US-based organisation Thorn, which develops AI software to target CSAM.

Delaney moved to Thorn immediately after leaving Europol in January 2022 and is listed in the lobby register of the German federal parliament as an “employee who represents interests directly”.

EU officials who move to the private sector to work on issues related to what they worked on during their last three years of EU service require formal permission, which can be denied if it is deemed that such work “could lead to a conflict with the legitimate interests of the institution”.

In response, Europol said: “Taking into account the information provided by the staff member and in accordance with Europol’s Staff Regulation, Europol has authorised the referred staff member to conclude a contract with a new employer after his end of service for Europol at the end of 2021”.

In June, Delaney paid a visit to his former colleagues, writing on LinkedIn: “I’ve spent time this week at the #APTwins Europol Annual Expert Meeting and presented on behalf of Thorn about our innovations to support victim identification.”

Illustrative photo by EPA-EFE/RONALD WITTEK

A senior former Europol official, Fernando Ruiz Perez, is also listed as a board member of Thorn. According to Europol, Ruiz Perez stopped working as Head of Operations of the agency’s Cybercrime Centre in April 2022 and, according to information on the LinkedIn profile of Julie Cordua, Thorn’s CEO, joined the board of the organisation at the beginning of 2023.

Asked for comment, Thorn replied: “To fight child sexual abuse at scale, close collaboration with law enforcement agencies like Europol are indispensable. Of course we respect any barring clauses in transitions of employees from law enforcement agencies to Thorn. Anything else would go against our code of conduct and would also hamper Thorn’s relationships to these agencies who play a vital role in fighting child sexual abuse. And fighting this crime is our sole purpose, as Thorn is not generating any profit from the organization’s activities.”

Alongside Ruiz Perez, on the board of Thorn is Ernie Allen, chair of the WeProtect Global Alliance, WPGA, and former head of the National Center for Missing & Exploited Children, NCMEC, a US organisation whose set-up fed into the blueprint for the EU’s own Centre.

Europol has also co-operated with WeProtect, a putatively independent NGO that emerged from a fusion of past European Commission and national government initiatives and has been a key platform for strategies to support Johansson’s proposal.

“Europol can confirm that cooperation with the WPGA has taken place since January 2021, including in the context of the WPGA Summit 2022 and an expert meeting organised by Europol’s Analysis Project (AP) Twins (Europol’s unit focusing on CSAM),” the agency said.

This article is part of an investigation supported by the IJ4EU programme; versions of the article are also published by Netzpolitik and Solomon.
