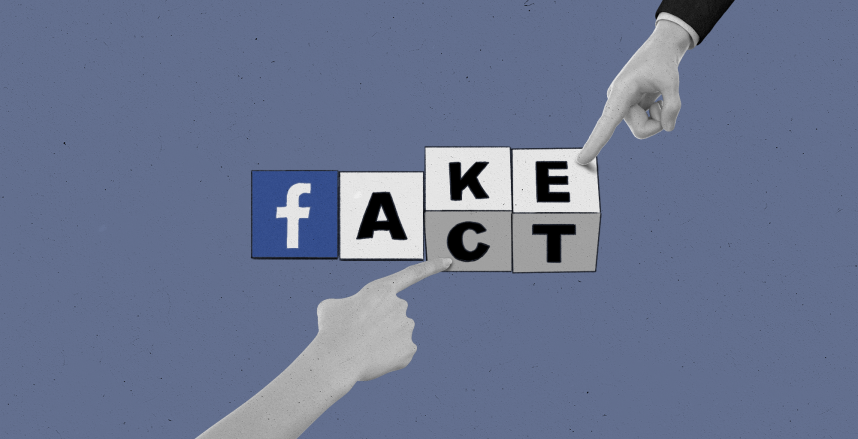

A widespread failure to understand how Meta’s fake news fight is run has left independent fact-checkers in the crossfire. The falsehoods keep coming, often from politicians who are spared the scrutiny.

While such warnings should result in reduced visibility for sites that continue to promote fake news, it is unlikely that content containing false information will ever actually be removed by Meta unless it violates community standards, for example those covering hate speech.

Small publishers in the Balkans say they are being unfairly punished for relying on pickups that turn out to be false, while fact-checkers find themselves caught in the crossfire, demonised by social media users and media outlets that appear not to understand the real nature of their work while taking the flak for the continued scourge of fake news.

Meta “used us as a scapegoat for why there was still ‘fake news’ out there, instead of taking any responsibility for their own massive role in spreading it and pushing it to the screens of the people they had identified as being most likely to believe it and be motivated to act on it,” said Brooke Binkowski, a former managing editor of Snopes, a fact-checking site that has partnered with Facebook.

BIRN asked Facebook for data concerning content in Balkan languages but received no response. Meta also did not respond to a request for comment on the third-party fact-checking programme, commonly known as TPFC.

System widely misunderstood

Illustration: Visitors take a picture of a billboard sign featuring a new logo and the name ‘Meta’ in front of Facebook headquarters in Menlo Park, California, USA, October 2021. Photo: EPA-EFE/JOHN G. MABANGLO

Facebook fact-checking took off following the 2016 election of Donald Trump as US president, when the social networking giant was accused of contributing to deep political polarisation and a failure to clamp down on misinformation designed to manipulate voters.

The work was outsourced to independent media organisations specialised in debunking false reports.

To become a Meta fact-checking partner, an organisation must be a signatory of the International Fact-Checking Network’s Code of Principles, meaning it must have a track record of doing fact-checking in its respective country or countries. With Meta’s own algorithm struggling to detect content that violates community standards in non-English-speaking areas, the work of local fact-checkers is seen as essential.

Based on algorithms and user reports, Meta flags to fact-checkers content they should take a look at; fact-checkers can also identify hoaxes on their own.

“Istinomer journalists on a daily level scan the platforms and media outlets in order to find viral potentially false information that could harm and influence citizens’ decision-making, or for which it is in the public interest that they are fact-checked,” said Milena Popovic of Belgrade-based fact-checker Istinomer.rs.

Popovic said that “it is not fully transparent” how Meta identifies potentially false information, but the company itself says it picks up on posts based on a number of signals, including how people are responding and how fast the content is spreading.

During major news events or for topics that are trending, Meta also uses keyword detection to gather related content in one place, making it easier for fact-checkers to find it, but BIRN has reported before on the problems Facebook’s AI has in detecting content that violates its standards in languages other than English.

Once the content has been fact-checked, it receives a rating on Meta’s platforms, from ‘false content’ to content containing ‘some factual inaccuracies’ to content that implies a false claim without stating it directly. Such posts are not removed, but Meta reduces their spread and the advertising earnings that can be made off them.

“The content removed by Facebook is content that does not comply with its advertising policies, content that contains hate crimes, and posts related to terrorism from fake accounts,” said Emre Ilkan Saklica of Turkish fact-checking organisation Teyit. “We have no involvement there.”

Fact-checkers say this is frequently misunderstood, to dramatic effect.

“Fact-checkers do not have the technical or any other ability to remove content or ‘suspend pages’ and this is not at all what TPFC is about,” said Tijana Cvjeticanin from Sarajevo-based Raskrinkavanje.ba.

“Nevertheless, people who make money off spreading disinformation, toxic political propaganda or conspiracy theories (or, in some cases, people who genuinely believe such narratives) often publish outright lies about the work that we do,” Cvjeticanin told BIRN.

“This has morphed into a form of harassment fuelled by false arguments like the made-up claims that we ‘surveil’ private profiles or that we censor, remove, or ban content on Facebook.”

No right to appeal for personal accounts

Illustration. Photo: EPA-EFE/ANDREJ CUKIC

In a BIRN questionnaire aimed at Meta users in the Western Balkans, Turkey and Greece, of all posts that respondents said were flagged as false, 21 per cent concerned public individuals such as politicians, journalists, celebrities, sportspeople or businessmen; 17 per cent concerned issues related to COVID-19.

Some two thirds of COVID-19 content that was flagged was shared by private individuals, while journalists were behind most flagged content concerning domestic politics and armed conflicts.

Some 15 per cent of flagged content reported by the questionnaire respondents concerned armed conflict, including in Ukraine, Yemen and Syria, while 13 per cent was about domestic politics.

Fact-checkers who spoke to BIRN said it is difficult to single out any one topic that triggers the most fake news. But photos were more likely to be flagged than text articles: 41 per cent compared to 30 per cent, according to the BIRN questionnaire.

Meta directs fact-checkers to prioritise analysing content about the use or effects of medicine; products or jobs that could risk major financial loss; elections or crises; and content targeting a particular ethnic, social or religious group.

One third of respondents said they didn’t remember the exact rating applied to their content; around a quarter said their posts were labelled ‘false’. Under Meta’s rules, most of these people cannot appeal since this is only possible for individuals if the post was shared on a page or within a group, not on personal profiles.

Almost half of respondents said they noticed their posts received less attention after being flagged; in 59 per cent of cases, the posts were later removed, apparently for violating Meta’s community standards.

Politicians spared much scrutiny

Illustration: Unsplash.com/Sergey Zolkin

Some media outlets appear keen to correct content flagged by fact-checkers, given how sensitive they are to the loss of earnings if Facebook reduces their visibility.

Darvin Muric, editor in chief at Raskrinkavanje.me in Montenegro, said that since joining Meta’s fact-checking programme in 2020, the organisation has been receiving “a dramatically higher number of corrections.”

“Media started correcting their inaccurate articles themselves and sending requests to correct the rating,” Muric told BIRN. “Media outlets sent us literally hundreds of such corrections, and recently they started sending us corrections for articles that they didn’t even share on Facebook.”

But, said Muric, it’s a different story when it comes to “individual pages, fake profiles, extreme right-wing portals, places designed to spread false news and hate.”

“They don’t use the options of appeal or correction,” he said. “They prefer to launch online campaigns targeting Raskrinkavanje.me editorial staff, journalists, or management.”

Currently, Meta does not apply such warning labels to the posts or ads of politicians, despite their clear role in spreading false information.

While fact-checking organisations continue to fact-check politicians’ statements in their regular work, Meta has rebuffed calls to include them in its ratings system. To do so would have a significant effect, said Cvjeticanin of Raskrinkavanje.ba.

“Statements from political actors often reach more people than other types of content, especially if the actors in question are skilled at using social media,” she told BIRN.

“If these statements contain false claims, particularly dangerous ones that promote conspiracy theories like QAnon and such, it’s important that people who see or hear them are also able to see the facts and accurate information about those claims.”

Meta justifies its approach as “grounded in its fundamental belief in free expression, respect for the democratic process.”

“Especially in mature democracies with a free press, political speech is the most scrutinised speech there is,” the company says on its website. “Just as critically, by limiting political speech, we would leave people less informed about what their elected officials are saying and leave politicians less accountable for their words.”

Muric points out, however, that people in the Balkans do not live in ‘mature democracies’ with a free press.

“Raskrinkavanje.me currently does not have a special section or newsroom that would check politicians’ statements,” he said, “but we analyse media articles in which false claims of politicians are transmitted without verification, or when biased reporting favours facts, positions and conclusions that fit a certain narrative, often without respecting the rule of contacting the other side.”

Azem Kurtic, based in Sarajevo, Bosnia and Herzegovina, contributed to this article.